What is Presence Penalty?

Presence Penalty is between -2.0 and 2.0 and it impacts how the model penalizes new tokens based on whether they have appeared in the text so far.

Positive values will increase the likelihood of the model talking about new topics by penalizing new tokens that have already been used.

The presence penalty is a one-time, additive contribution that applies to all tokens that have been sampled at least once, while the frequency penalty is a contribution that is proportional to how often a specific token has already been sampled. In general, the default value for Presence Penalty is 0 and it’s used when you want to generate text that is coherent with the input prompt, by using words that are present in the input.On the other hand, if you want the model to generate text that is less restricted by the input, you might want to use a higher presence penalty, this will encourage the model to generate novel words that were not present in the input, allowing for more diverse and creative output.

Adjusting the Presence Penalty

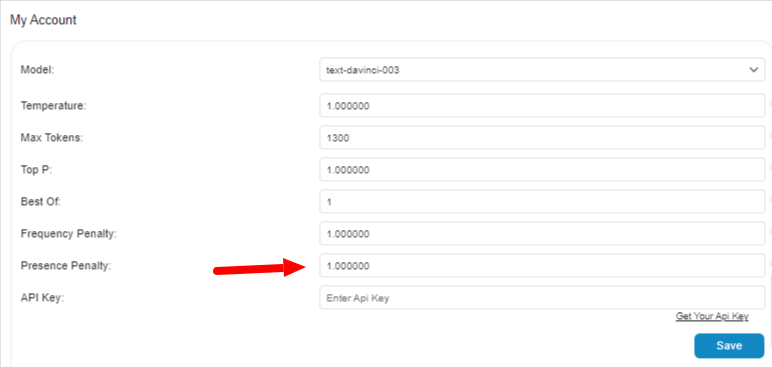

When our plugin is installed for the first time, it comes with 0.01 as the default Presence Penalty value. However you can change it from the Presence Penalty field in the Account tab. Here are the steps:

- Click on the Save button to save your changes.

Differences Between Frequency and Presence Penalty

The main difference between the two parameters is the way they modify the probability distribution of the model’s predictions.

Frequency penalty parameter modifies the probability distribution to make less likely words that the model has seen more frequently during its training. This encourages the model to generate novel or less common words. It works by scaling down the log probabilities of words that the model has seen frequently during training, making it less likely for the model to generate these common words.

On the other hand, the presence penalty parameter modifies the probability distribution to make less likely words that were present in the input prompt or seed text. This encourages the model to generate words that were not in the input. It works by scaling down the log probabilities of words that were present in the input, making it less likely for the model to generate these words that are already in the input.

To put it simply, frequency penalty penalizes the model for generating the common words that the model has seen a lot during training whereas presence penalty penalizes the model for generating the words that are present in the input text.

Both parameters can be used to increase the diversity of the generated text and to encourage the model to generate more novel or unexpected words, but they do so in different ways and depending on the use case and specific requirements, one may be more beneficial than the other, or they can be used together to control the diversity of the generated text.